IIPC Web Archiving Toolset Performance Testing at The British Library

The British Library is adopting the International Internet Preservation Consortium (IIPC) Toolset [1], comprising the Web Curator Tool (WCT), NutchWax and the Open Source Wayback Machine (OSWM), for its evolving Web archiving operations, in anticipation of Legal Deposit legislation being introduced within the next few years. The aim of this performance-testing stage was to evaluate what hardware would be required to run the toolset in a production environment.

This article summarises the results of that testing with recommendations for the hardware platform for a production system.

To support current UK Web Archiving Consortium (UKWAC) [2] use, it is recommended that the archive is hosted on three machines. The first will host the WCT [3], including its harvest agent, running up to five simultaneous gathers; the second will run NutchWax for searching and the OSWM for viewing the archive; and the third will be a Network Attached Storage (NAS) server. The second machine will also perform daily indexing of newly gathered sites, executed during the night when user access is likely to be low. A bug was found in the WCT when finishing large crawls; it must be fixed before the system can be accepted for operational use, and has been reported to the WCT developers. Testing has shown that using NAS with the IIPC components does not constitute a problem from a performance point of view. For more information about the conclusions drawn, see the Recommendations section at the end of this article.

Testing Hardware

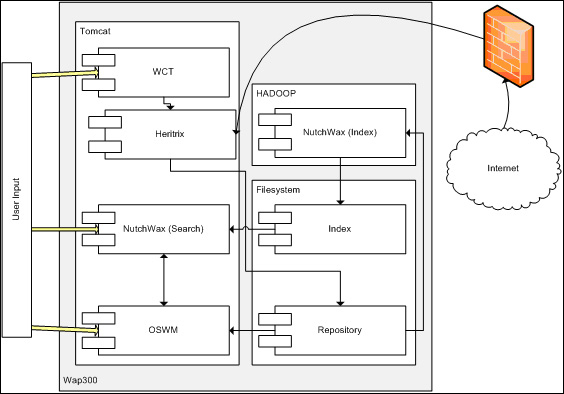

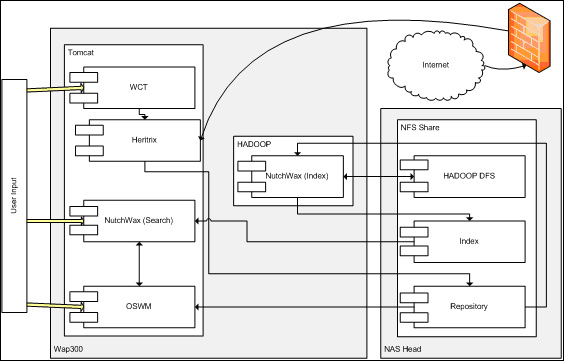

The testing was performed on a single machine, and only one test was performed at a time. The machine has a single 3 GHz Intel Xeon processor (featuring Hyperthreading) with 1 Gb of RAM and a 760 Gb hard disk. Figure 1 shows the component diagram for the testing hardware and software.

Figure 1: Component Diagram for the Testing Hardware and Software

The Web Curator Tool was tested in three ways:

- Gathering Web sites of different sizes

- Gathering several Web sites at once

- Multiple users reviewing a Web site simultaneously

For the gathering of Web sites of various sizes, the gathering, reviewing (i.e. looking at the gathered Web site to check the quality) and submission to the archive were all monitored. As expected, the average inbound data was larger than the average outbound data, but the average did not increase linearly with the size of the site, presumably because some sites have more small pages and are hence limited by the politeness rules (one hit per 300 ms per server). There were no trends in the average memory or Central Processing Unit (CPU) usage (CPU load: 0.14 - 0.34, memory footprint: 90 - 240 Mb). The maximum memory footprint was 300 Mb for the 5 Gb crawl.
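The effect of the politeness rule on sites with many small pages can be illustrated with a minimal sketch. It assumes a single server and that the 300 ms delay is the only limit; the function names and the example page sizes are hypothetical and are not taken from the tests.

```python
# Sketch only: upper bounds implied by a politeness delay of one hit
# per 300 ms per server (the figure quoted in the article).
POLITENESS_DELAY_MS = 300


def max_requests_per_second(delay_ms: float = POLITENESS_DELAY_MS) -> float:
    """Upper bound on requests per second sent to a single server."""
    return 1000.0 / delay_ms


def throughput_ceiling(avg_response_size: float,
                       delay_ms: float = POLITENESS_DELAY_MS) -> float:
    """Upper bound on inbound data per second from one server.

    The result is in the same size unit as avg_response_size.
    """
    return avg_response_size * max_requests_per_second(delay_ms)


if __name__ == "__main__":
    # Hypothetical average response sizes, for illustration only.
    for size in (10, 100):
        print(f"average response size {size}: "
              f"ceiling {throughput_ceiling(size):.1f} per second")
```

Under these assumptions the ceiling scales with the average response size, so a site made up of small pages can transfer data far more slowly than a site of similar total size made up of large files.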

In summary, the 5 Gb Web site can be harvested comfortably on the hardware used, but the performance of other tasks is affected at the end of the crawl, when the archived sites are being moved on disk.

Both reviewing and submission require a large memory footprint (up to 537 Mb for submitting a 5 Gb site), but submission in particular is very quick - varying from a few seconds to four minutes for the 5 Gb crawl. Submission also loads the CPU.

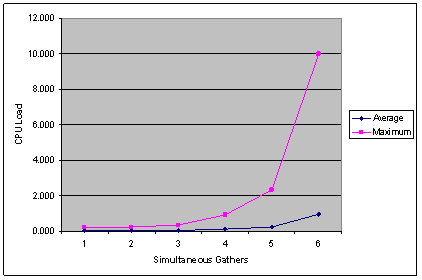

Multiple simultaneous crawls were also tested with a set of Web sites. The same Web sites were used throughout (i.e. first site in tests 1-6, second site in tests 2-6, etc.) to allow some analysis of how running multiple gathers affects gather times. For the multiple-gathers tests, only gathering was monitored, since multiple reviewing is covered in another test. Again there was no clear trend in memory footprint, with a maximum of less than 100 Mb. CPU load showed a clear trend, however, with a sharp increase around five simultaneous crawls (see Figure 2).

Figure 2: CPU Load for Simultaneous Crawls

This implies that limiting the system to four or five simultaneous crawls is sensible to avoid times when the system becomes unresponsive. Six simultaneous gathers also had an impact on gather times. Gathering up to five sites had very little effect on gather times, but adding a sixth gather delayed all six of the gathers, with gather times for the sites increasing by between 8% and 49% above the average of the previous runs.

As expected, inbound data transfer increases as the number of gathers increases; but even with six simultaneous gathers the average bandwidth is only 313 Kb/s, well below the 500 Kb/s bandwidth restriction that had been set in the WCT.

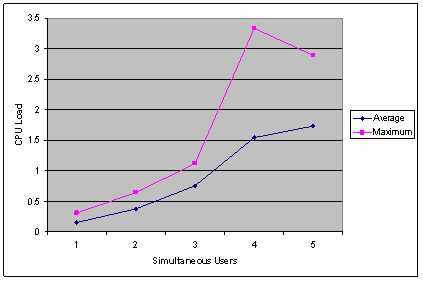

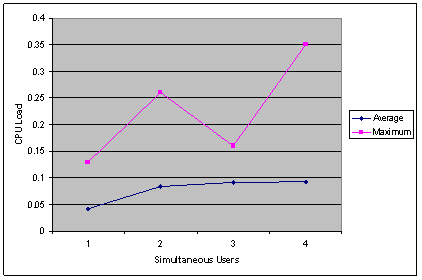

The final tests of the WCT involved persuading multiple users to review a site in the WCT simultaneously. During reviewing there was an upward trend of data transfer; but even with five people reviewing the site it did not reach significant levels. Throughout testing, data transfer (inbound and outbound) remained in the range 18-143 Kb/s. Average CPU load also increased with the number of users, and, with four or five simultaneous users, it reached levels that would interfere with gathers that were also being performed on the same machine. See Figure 3 for a graph of CPU load.

Figure 3: CPU Load for Multiple Reviewers

NutchWax Results

NutchWax was tested in three ways:

- Indexing Web sites of various sizes

- Searching Web sites of various sizes

- Multiple users searching a Web site simultaneously

When indexing the sites of various sizes, three stages were monitored: preparation (deleting old files from the HADOOP [4] file system and copying in the new ones), indexing, and retrieving the index from HADOOP. The preparation had a negligible effect on all the monitored metrics. Both indexing and retrieval showed an upward trend in memory, disk bandwidth and CPU load; however, the trend is poorly described by a linear fit. This could be because the complexity of a site, rather than just its raw size, drives the metrics: a site with many more HTML pages or lots of links could increase the metrics over a similarly sized simple Web site. Both indexing and retrieval have a large memory footprint: indexing peaked at 432 Mb for the 5 Gb site, and retrieval at 382 Mb. CPU load was higher during indexing than retrieval, with peak figures for the 5 Gb crawl being 2.4 and 0.38 respectively. It took just over 21 minutes to index the 5 Gb crawl, which implies that the testing hardware can comfortably support the current UKWAC activity.

Searching did not significantly load the server in any metric, and did not show a steady upward trend on the sizes tested. However, average search times did increase on the sizes tested. The longest search time was around 0.1 seconds, and the average for 5 Gb was only 0.028 seconds, so the system should be able to support far larger indices than the ones we tested. More testing is required to determine how search times are affected on an index of 2 Tb of archived material, as this is the order of magnitude the live system will need to support. However, we are currently unable to do this in a reasonable timeframe, as gathering that much data would take several months. Searching with multiple users behaved similarly, with the exception of six users, which performed significantly more slowly. However, the system was under significant load during the initial rest period for that test, and that load continued throughout the test, which could have affected the times recorded. With five users the system was managing a search every four seconds. This compares well with the current rate of page hits on the UKWAC site, which is one every 7.5 seconds on average (although peak traffic will occur during the day).
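The comparison between the measured search rate and current UKWAC traffic can be made explicit with a small sketch. The two intervals are the figures quoted above; the function name is hypothetical, and the calculation ignores peak-time traffic, which the article notes will be higher during the day.

```python
# Sketch only: compare the search rate sustained in testing with the
# current average request rate on the UKWAC site.

def rate_per_second(interval_seconds: float) -> float:
    """Convert 'one request every N seconds' into requests per second."""
    return 1.0 / interval_seconds


# One search every 4 seconds with five simultaneous test users.
measured_rate = rate_per_second(4.0)
# One page hit every 7.5 seconds on the current UKWAC site (average).
current_rate = rate_per_second(7.5)

# Ratio of the rate sustained in testing to the current average rate.
headroom = measured_rate / current_rate

if __name__ == "__main__":
    print(f"measured: {measured_rate:.3f}/s, current: {current_rate:.3f}/s, "
          f"headroom: {headroom:.3f}x")
```

On these averages the tested rate is a little under twice the current average request rate, so the margin over daytime peaks may be smaller than the averages suggest.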

Open-Source Wayback Machine Results

The OSWM was tested in two ways:

- Viewing Web sites of various sizes

- Multiple users viewing an archived Web site simultaneously

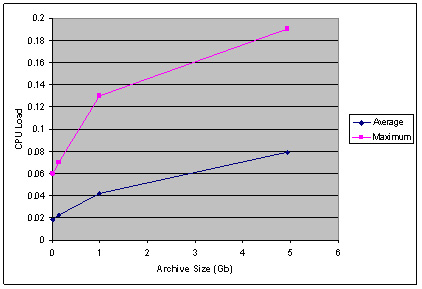

Viewing Web sites resulted in fairly low load across all metrics; however, average CPU load increased with both the number of users and the size of the archive. CPU load appears to grow logarithmically with archive size, although fitting a logarithmic trend line to the data gave a poor (but better than linear) correlation. This suggests that a larger archive would not cripple performance (see Figure 4), but more testing is required once a larger archive is available.

Figure 4: CPU Load When Viewing Archives of Varying Sizes

The increase in average CPU load against the number of users is more linear, as more users lead to more requests and hence a higher load. During the testing the load per user was abnormally high, since users were trying to maximise the requests they generated rather than viewing, reading and exploring the archive. The results presented for a few test users should therefore be equivalent to those for a larger number of real-world users. Figure 5 shows how the average and maximum CPU load varied with increasing numbers of users.

Figure 5: CPU Load with Varying Numbers of Users

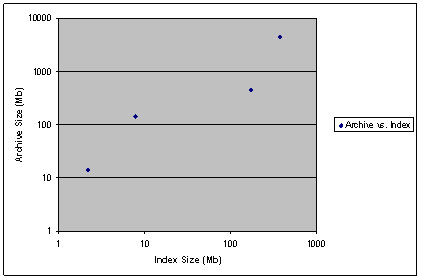

Index Size

The size of the index in relation to the archive is important to ensure that enough disk space is reserved for that purpose. In Figure 6, the archive size and the index size are compared for the four different test crawl sizes. A logarithmic scale is used to show the lower values more clearly. Index size varied between 5% and 39% of the size of the archive.

Figure 6: Comparing Index and Archive Sizes

Storage

We repeated all the single-user tests for different sizes of sites using Network Attached Storage (NAS) to see how storage that has to be accessed across the network would affect the performance of the system. NAS has several advantages: it requires fewer servers to manage large amounts of storage; it is easily configured into RAID arrays for improved data resilience; and it is a standard way of storing large volumes of data, which is likely to fit well with a hosting provider. The disadvantage is that all information written to disk has to be transmitted across the network (which may have a detrimental effect on performance). It has been reported that HADOOP encounters difficulties when it is run using NAS over the Network File System (NFS) protocol. The tests we performed allowed us to check that NutchWax (using HADOOP) would function correctly and to investigate the performance of using NAS for storage. Both the live index and archive (used in searching and viewing archived sites) were moved to the NAS, and in addition the HADOOP file system (used by NutchWax to create an index) was located on the NAS (see Figure 7).

Figure 7: Testing Hardware Configuration for NAS Tests

The metrics for the NAS tests were in some cases better than local storage and in some cases worse. There was no identifiable pattern to the differences, so we can only assume these relate to random fluctuations in usage caused by the combination of programs running at the time and other network traffic. In general, times using the NAS storage were better, although there were a couple of exceptions when the job took slightly longer using the NAS than local storage. We attribute the general trend towards faster execution to the NAS server having higher performance disks than the local server. In particular the indexing (which made the heaviest use of the NAS and was potentially problematic due to HADOOP not being designed to work with NAS) was significantly faster in all but one case, and was only one second slower in the anomalous case.

Recommendations

General

Memory use has been high throughout testing, as several of the programs suggest reserving a large amount of memory. It is recommended that each machine used holds at least 2 Gb of RAM; this will avoid lack of memory causing swapping and thereby significantly prolonging the processing of jobs.

All testing was performed on a single machine with a direct-attached hard disk. In operational use we will need access to a large amount of disk storage, and that will be provided through Network Attached Storage (NAS).

Bugs

A problem was found when performing large crawls (a 5 Gb crawl works; a 10 Gb crawl does not). The crawl completes successfully, but then times out during saving and is stuck in the 'stopping' state in the WCT. We believe we have tracked down the cause of the timeout and have reported it to the WCT developers for fixing in the near future.

Gathering/Reviewing

Limiting each harvest agent to four or five simultaneous gathers should avoid most problems due to overloading. Additional crawls will be automatically queued, so if more than five crawls are scheduled to occur simultaneously, some will be queued to be processed once others have finished. The average size of a site in the current UKWAC archive is 189 Mb, which should gather in around 1 hour and 20 minutes on average, or 18 consecutive gathers in 24 hours. Assuming five gathers simultaneously, a single machine of the same specification as the testing hardware would be able to support approximately 5 * 18 = 90 gathers per day, well above the current average of approximately 10. It is therefore fairly safe to assume that we can continue with a single gathering machine / harvest agent for the short-term future of small-scale, permissions-based crawling, with the option to introduce another harvest agent in the future if we significantly ramp up gathering schedules. For the same machine to support reviewing in addition to gathering, it will be necessary to add a second processor or a dual-core processor, as multiple gathering and reviewing simultaneously places a noticeable load on the processor.
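The capacity estimate above can be reproduced with a short sketch. The inputs (80-minute average gather, five simultaneous gathers, roughly 10 gathers per day at present) are the article's figures; the function name is hypothetical, and the model assumes that gathers run back to back around the clock and that every gather takes the average time.

```python
# Sketch only: daily gather capacity of one harvest agent.
MINUTES_PER_DAY = 24 * 60


def gathers_per_day(avg_gather_minutes: int = 80,
                    simultaneous_gathers: int = 5) -> int:
    """Estimate how many average-sized gathers one machine completes per day."""
    # Whole gathers that fit consecutively in one day in a single slot.
    per_slot = MINUTES_PER_DAY // avg_gather_minutes
    return per_slot * simultaneous_gathers


if __name__ == "__main__":
    capacity = gathers_per_day()
    current_average = 10  # approximate current gathers per day
    print(f"capacity: {capacity} gathers/day, "
          f"{capacity / current_average:.0f}x the current average")
```

Because real gather times vary widely with site size and complexity, this is best read as an order-of-magnitude check rather than a scheduling guarantee.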

Indexing

We currently collect around 3.5 Gb per day. It is estimated that we can index that in around 15 minutes on a machine of similar specification to that used in the testing. It is therefore assumed that we can use the machine that hosts NutchWax searching and the OSWM for indexing, indexing each day's new ARC files during the night when the load on NutchWax and the OSWM for display is likely to be lighter. This arrangement is likely to continue throughout the permissions-based gathering, but will need to be reviewed when we begin performing broad crawls of the UK domain. NutchWax is designed to be inherently scalable, but both the technical team at the National Library of the Netherlands (Koninklijke Bibliotheek - KB) and we have had problems getting it to work on more than one machine.
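The 15-minute figure is consistent with scaling the measured indexing time in proportion to data volume, as the sketch below shows. Linear scaling is an assumption made here for illustration; the tests found that indexing metrics were not well described by a linear fit, so the result is only a rough guide. The function name is hypothetical, and the reference time is rounded from "just over 21 minutes".

```python
# Sketch only: estimate nightly indexing time by linear scaling from
# the measured 5 Gb crawl (about 21 minutes to index).
REFERENCE_SIZE_GB = 5.0
REFERENCE_MINUTES = 21.0


def estimate_index_minutes(daily_size_gb: float) -> float:
    """Scale the reference indexing time in proportion to data volume."""
    return daily_size_gb / REFERENCE_SIZE_GB * REFERENCE_MINUTES


if __name__ == "__main__":
    # Around 3.5 Gb is currently collected per day.
    print(f"estimated indexing time: {estimate_index_minutes(3.5):.1f} minutes")
```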

Searching

We need to perform larger-scale tests on searching since the production system will have over 2 Tb of archived data indexed - far greater than the 5 Gb we were able to test. When we migrate the existing data from the PANDAS system we will get an opportunity to test searching on the system before it goes live, and hence determine a suitable hardware framework for searching.

Viewing

The nature of the viewing tests (multiple people quickly hopping from link to link) means that they model heavier usage than would occur in practice. One person quickly testing links might be the equivalent of three, four or even more people using the archive, reading the pages in some detail and hence requesting pages less frequently. Generally, load on all metrics was fairly low, which implies we can deal with far more users than the tests measured. It is estimated that the system could support up to 50 concurrent users with similar hardware to the test system, although this number may be lower with a larger archive.

Index

We recommend that an area 20% of the size of the archive is reserved for the index.
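As a worked illustration of this recommendation, the sketch below applies the 20% rule to a 2 Tb archive, alongside the 5% to 39% range observed in the tests. The function name is hypothetical, and treating 1 Tb as 1000 Gb is an assumption made for round numbers.

```python
# Sketch only: disk space to reserve for the index.
RECOMMENDED_FRACTION = 0.20  # recommended reserve
OBSERVED_MIN = 0.05          # smallest index/archive ratio seen in testing
OBSERVED_MAX = 0.39          # largest index/archive ratio seen in testing


def index_reserve(archive_size: float,
                  fraction: float = RECOMMENDED_FRACTION) -> float:
    """Index space for a given archive size, in the same unit as the input."""
    return archive_size * fraction


if __name__ == "__main__":
    archive_gb = 2000.0  # 2 Tb, assuming 1 Tb = 1000 Gb
    print(f"recommended reserve: {index_reserve(archive_gb):.0f} Gb")
    print(f"observed range: "
          f"{index_reserve(archive_gb, OBSERVED_MIN):.0f} to "
          f"{index_reserve(archive_gb, OBSERVED_MAX):.0f} Gb")
```

Since the largest observed ratio was well above 20%, it may be worth monitoring actual index growth against the reserved area as the archive grows.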

Storage

We recommend that Network Attached Storage (NAS) is used to store both the index and the archive. Despite the overhead of communicating information across the network, NAS generally performed better in our tests and is easily configured into a RAID array for improved data resilience.

References

1. International Internet Preservation Consortium (IIPC) software toolkit http://netpreserve.org/software/toolkit.php

2. UK Web Archiving Consortium Project Overview http://info.webarchive.org.uk/

3. Philip Beresford, Web Curator Tool, January 2007. Ariadne, Issue 50. http://www.ariadne.ac.uk/issue50/beresford/

4. Wikipedia entry on Hadoop, retrieved 29 July 2007 http://en.wikipedia.org/wiki/Hadoop