Data Services for the Sciences: A Needs Assessment

Computational science and raw and derivative scientific data are increasingly important to the research enterprise of higher education institutions. Academic libraries are beginning to examine what the expansion of data-intensive e-science means to scholarly communication and information services, and some are reshaping their own programmes to support the digital curation needs of research staff. These changes in libraries may involve repurposing or leveraging existing services, and the development or acquisition of new skills, roles, and organisational structures [1].

Scientific research data management is a fluid and evolving endeavour, reflective of the high rate of change in the information technology landscape, increasing levels of multi-disciplinary research, complex data structures and linkages, advances in data visualisation and analysis, and new tools capable of generating or capturing massive amounts of data.

These factors can create a complex and challenging environment for managing data, and one in which libraries can have a significant positive role supporting e-science. A needs assessment can help to characterise scientists' research methods and data management practices, highlighting gaps and barriers [2], and thereby improve the odds for libraries to plan appropriately and effectively implement services in the local setting [3].

Methods

An initiative to conduct a science data services needs assessment was developed and approved in early 2009 at the University of Oregon. The initiative coincided with the hiring of a science data services librarian, and served as an initial project for the position. A researcher-centric approach to the development of services was a primary factor in using an assessment to shape services [4]. The goals of the project were to:

- define the information services needs of science research staff;

- inform the Libraries and other stakeholders of gaps in the current service structures; and

- identify research groups or staff who would be willing to participate in, and whose datasets would be good subjects for, pilot data curation projects.

The library took the lead role on the assessment, consulting with other stakeholders in its development and implementation. Campus Information Services provided input on questions regarding campus information technology infrastructure, and helped to avoid unnecessary overlap with other IT service activities focused on research staff. The Vice President for Research and other organisational units were advised of the project and were asked for referrals to potential project participants. These units provided valuable input in the selection of staff contacts. Librarian subject specialists also suggested staff who might be working with data and interested in participating. Librarians responsible for digital collections, records management, scholarly communications, and the institutional repository were involved in the development of the assessment questions and project plan.

The questions used in the assessment were developed through an iterative process. A literature and Web review located several useful resources and examples. These included the University of Minnesota Libraries' study of scientists' research behaviours [3], and a study by Henty, et al. on the data management practices of Australian researchers [5]. The Data Audit Framework (DAF - now called the Data Asset Framework) methodology was considered to provide the most comprehensive set of questions with a field-tested methodology and guidelines [6][7][8][9][10][11]. The stages outlined in the DAF methodology were also instructive, although we elected not to execute a process for identifying and classifying assets (DAF Stage 2), since the organisational structure of our departments and institutes is not conducive to that level of investigation. From the beginning it was recognised that recruitment of scientists was based as much on their willingness to participate as on their responsibility for any specific class or type of research-generated data.

A first draft set of questions was based largely on the DAF, with input from the University's Campus Information Services, and the Electronic Records Archivist who had recently conducted interviews with social scientists in an investigation of their records-management practices [12]. The question set was further refined based on a suggested set of data interview questions from the Purdue University Libraries [13]. The Data Curation Profiles project by Purdue University Libraries and the University of Illinois, Urbana-Champaign [14], was in process at that time, and materials shared by those investigators also influenced the final set of interview questions. The final set of questions is included in the Appendix.

One of the recommendations of the DAF methodology is to create a business case to formalise the justification for and benefits, costs, and risks of the assessment [6]. The methodology materials included a useful model for the structure and content of this document. Familiarity with the risks and benefits in the local context was helpful for outreach to deans, chairs, and individual staff. Establishing common definitions for the topics and issues at hand improved the outreach process, and established realistic expectations for the outcomes of the assessment prior to the interviews.

We originally considered using an online survey in parallel with interviews. However, initial interviews quickly established that there would be an ongoing need for contextualising the questions. The conversational format of an interview enabled the author to define terminology and frame and clarify questions within the context of a particular scientist's research area, thereby providing a process that would yield more complete and accurate information.

The DAF group had developed an online tool which they were kind enough to share with the author. The structure of the tool was a useful starting point for the development of forms for data gathering. Because our assessment workflow was not as extensive as the DAF, we chose to create a set of Drupal-based forms for recording information into a MySQL database. Drupal provided a quick development environment for the forms and enabled us to tie data entry to a basic authentication scheme. During the course of the interview, the interviewer recorded the information under the scientist's account. This allowed the scientist to log in and review and add records about their data assets as a follow-up to the interviews.
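The arrangement described above, in which each data asset record is tied to a scientist's account so that the scientist can later review and add records, can be sketched as a small relational model. This is only an illustrative sketch: the table names, column names, and helper functions are hypothetical, the fields are loosely drawn from the interview questions in the Appendix, and SQLite stands in for the MySQL database behind the Drupal forms.

```python
import sqlite3

# Hypothetical schema: names are illustrative only, and SQLite is used
# here as a stand-in for the MySQL database described in the text.
SCHEMA = """
CREATE TABLE scientist (
    id INTEGER PRIMARY KEY,
    username TEXT NOT NULL UNIQUE
);
CREATE TABLE data_asset (
    id INTEGER PRIMARY KEY,
    scientist_id INTEGER NOT NULL REFERENCES scientist(id),
    subject TEXT NOT NULL,
    file_formats TEXT,
    current_location TEXT
);
"""


def open_db():
    """Create an in-memory database with the illustrative schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def add_scientist(conn, username):
    """Register an account and return its id."""
    cur = conn.execute(
        "INSERT INTO scientist (username) VALUES (?)", (username,)
    )
    return cur.lastrowid


def record_asset(conn, scientist_id, subject,
                 file_formats=None, current_location=None):
    """Store one data asset description under a scientist's account."""
    cur = conn.execute(
        "INSERT INTO data_asset "
        "(scientist_id, subject, file_formats, current_location) "
        "VALUES (?, ?, ?, ?)",
        (scientist_id, subject, file_formats, current_location),
    )
    return cur.lastrowid


def assets_for(conn, username):
    """List the asset subjects recorded under one account, oldest first."""
    rows = conn.execute(
        "SELECT a.subject FROM data_asset a "
        "JOIN scientist s ON s.id = a.scientist_id "
        "WHERE s.username = ? ORDER BY a.id",
        (username,),
    )
    return [row[0] for row in rows]
```

Keying every asset to an account is what would allow both the interviewer and the scientist to add to the same set of records.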

Interviews were conducted throughout the 2009-2010 academic year, but most of them were completed between September and December 2009. Once interviews were done, the material was exported as a .csv file and then imported into Microsoft Excel for analysis and reporting via pivot tables and graphs.
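The pivot-table step can also be approximated in a few lines of code. The following is a minimal sketch, not the workflow actually used (which relied on Microsoft Excel): it cross-tabulates two columns of a .csv export. The column names `department` and `backup` and the sample rows are invented for illustration and do not reflect the assessment's data.

```python
import csv
import io
from collections import Counter, defaultdict


def pivot_counts(rows, row_field, col_field):
    """Count records for each (row_field, col_field) pair, pivot-table style."""
    table = defaultdict(Counter)
    for row in rows:
        table[row[row_field]][row[col_field]] += 1
    # Convert to plain dicts so the result is easy to print or compare.
    return {key: dict(counts) for key, counts in table.items()}


# Invented sample standing in for the exported file; a real export would be
# opened with open(path, newline="") instead of io.StringIO.
SAMPLE_CSV = """department,backup
Biology,external drive
Biology,none
Physics,external drive
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
table = pivot_counts(rows, "department", "backup")

for department, counts in sorted(table.items()):
    print(department, counts)
```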

To keep the project to a manageable scope, the assessment was limited to the natural sciences, which are within the College of Arts and Sciences at the University of Oregon. Research staff and other staff from the following departments and groups were invited to participate: Biology, Chemistry, Computer and Information Science, Geological Sciences, Human Physiology, Physics, Psychology, the Center for Advanced Materials Characterization at Oregon (CAMCOR), the Institute for a Sustainable Environment, and the Museum of Natural and Cultural History. The Maps Librarian also provided information on Geographic Information System (GIS) datasets.

Presentations to the chairs of research centres and institutes and at an orientation for new staff seemed to be the most productive approach for recruiting participants. Library subject specialists also helped with outreach to interested staff, and each of the subject specialists accompanied the data librarian for an interview with one of the staff whose department they served.

Results

A total of 25 scientists were interviewed. The interviews generally took between 40 minutes and an hour, and were limited in most cases by the amount of time that could be scheduled with a scientist. In some cases the interview covered all the types of datasets that a lab or individual produced. In other instances we elected to focus on only one or two of several types of research-generated data because of time limitations. Despite invitations to use the online form to follow up and add other dataset information, only one scientist added another dataset description. Three logged in to review and update their post-interview records.

Numbers of participants, by group, are listed in the table below.

| Organisational Unit | Participants |
| --- | --- |
| Biology | 5 |
| Center for Advanced Materials Characterization at Oregon | 2 |
| Chemistry | 2 |
| Computer & Information Science | 1 |
| Geological Sciences | 5 |
| Human Physiology | 4 |
| Institute for a Sustainable Environment | 1 |
| Museum of Natural and Cultural History | 1 |
| Physics | 2 |
| Psychology | 2 |

Table 1: Participants by University of Oregon grouping

Participation included one or more staff in all of the targeted groups, and provided a broad enough cross-section of research and data management practices to meet our goals. In several cases scientists expressed the belief that most data management needs and problems were confined to 'big science' and massive datasets. However, during the course of the interviews, these scientists frequently noted datasets of relatively small scale that were critical to their research. As Heidorn has noted, much scholarly work is captured in smaller datasets that comprise the 'long tail' of scientific data [15]. Barriers and gaps in recording computational workflow and provenance were common features, even in datasets that initially seemed to have relatively simple origins.

Most of the staff noted the absence of domain repositories for their data and cursory checks indicated such data centres did not exist. Repositories in which scientists did participate included NSF-mandated deposits into the National Center for Airborne Laser Mapping for Light Detection and Ranging (LIDAR) data [16]; the Incorporated Research Institutions for Seismology consortium sponsored by the NSF for seismic data [17]; and the National Renewable Energy Laboratory's National Solar Radiation Database for solar energy data [18].

Some scholars expressed concern about publisher requirements with regard to data. In some cases, the journal requires that data accompany the article, but the data is published in a difficult-to-access format (e.g., as a table in a .pdf document rather than in a linked, tab-delimited file). Another comment was that 'even if it's published, the actual full data set will need to be made available some other way, since publishers don't have the space for the full data set.' An immediate outcome of the interview, and of the resulting awareness of repository options, was that one scientist deposited data into the Libraries' institutional repository to accompany an article at publication.

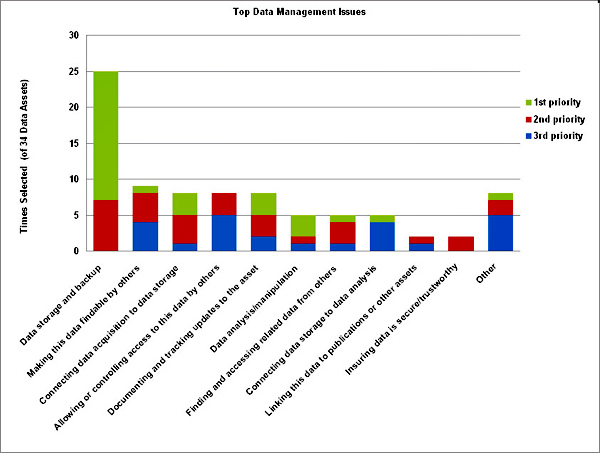

The final question of the interview provided scientists with a chance to select up to three top data management priorities from a list or create their own items. The top issues are shown in the graph below.

Figure 1: Data Management issues most frequently identified by survey respondents (this graph in alternative format )

Based on the selections and related comments in the interviews, the highest-ranking issues and gaps can be summarised as follows:

Infrastructure

Infrastructure (storage, backup). This situation is not substantially different from that found by Marcus, et al. [3] and Jones, et al. [7]. A variety of sub-optimal methods were used for data storage, ranging from desktop computers to external hard drives, laptops, and optical drives, frequently without secondary backup storage. Synchronisation of distributed files and version control were also noted as issues of significant concern. Storage is a baseline requirement for other curation activities, and a variety of stakeholders at the University are now developing more centralised and redundant options for researchers.

Despite the gaps in infrastructure and lack of domain repositories at this time, most researchers believed that their data should be preserved indefinitely (30 of 34 data assets).

Data Management Tools

Need for baseline and advanced data management tools and services in the research setting (annotation/metadata, access and discovery, version control, workflow, provenance). Scientists were most interested in services that could help them manage data at or very near the beginning of the data life cycle.

Research staff clearly desire help with managing data as close to the point of capture/generation as possible. For instance, these comments elaborated selections in the priorities list: 'General organization of the data and keeping things coherent across the lab.' 'Transferring data from a variety of instruments (some quite old) into one central database.' 'How to store data in an organized manner for other students in the lab to access (and add to).' 'Individual students may develop their own processes for analyzing data sets, and the issue is that if they use different methods, how should the data be backed up and the method(s) recorded.' 'Capturing metadata: sample description, project name, etc.... system to capture and store... and make it discoverable for later retrieval.'

This was one of the most notable things we observed, and it reinforced the role that libraries can play in data curation. By engaging in services and projects that target data acquisition and link it to fundamental curation practices, libraries can improve the usability and longevity of important data assets.

In their role as research managers and primary investigators, scientists also want their teams to acquire the necessary skills to manage data. Jones, et al. noted this in their report as well [7]. The Libraries plan to offer workshops and online resources specifically directed toward graduate students and other researchers in the natural sciences in the coming year.

Formalised data policies and best practices documentation or guidance were largely absent across the interview group. Follow-up conversations indicated that the interviews did prompt some staff to institute basic record-keeping and file management best practices in their research labs. Materials such as the UK Data Archive's best practices guide [19] are helpful, and like other libraries we will continue to expand online resources, training, and support services. These recommendations will be more useful and uniformly implemented if they are accompanied by tools, infrastructure, and support mechanisms that enable, facilitate, and reinforce policies and best practices. Therefore, it is the author's opinion that libraries must play a role in instigating or developing these related facets of data management.

Publication of Datasets

Staff expressed some interest in, and needs related to, publishing datasets and depositing them in repositories, but in most cases this was subsumed by the issues associated with managing the influx of data from current research.

Discussion

One of the challenges in recruiting participants was to communicate the need for investigating new services at a time when the library is facing budget cutbacks and limitations. In one case, a scientist voiced concerns over the erosion in serials subscriptions juxtaposed against the investment of resources in a new role in data curation. This sentiment was rare, but does highlight the need for greater transparency on issues around scholarly communications and library budgets.

More common issues in the assessment itself were those of conveying definitions for terminology (e.g., metadata, curation), and establishing realistic expectations for what services the library might be able to provide in the near and long term.

The process of meeting with staff, collecting information, and acting as a sounding board will, it is hoped, facilitate relationships and trust that are critical to developing services and collaboration.

Other benefits have been realised from the assessment:

- increased awareness of data management issues among research staff and stakeholders in the campus organisation

- a number of requests for training for graduate students and lab personnel

- increased communication between research staff and the Libraries about data management issues and data curation

Information technology support for e-science and cyberinfrastructure at the University is highly dependent on grant support and varies by lab, research institute, and department. A decentralised information technology structure is an issue that was frequently commented on by scientists. Appropriating the necessary resources and expertise for infrastructure enhancements will be challenging. Despite budgetary and technology resource constraints, partnerships and collaborations based on trusted relationships can begin to address some of these gaps. Exemplars of coordination within academic institutions, such as the E-Science Institute at the University of Washington [20] and those outlined by Soehner, et al. [21], are gaining support among staff.

With the exceptions noted above regarding mandated data deposit, scientists were not working under strict data management plans. The prospect of granting agencies implementing data management requirements, and the competitive advantages of such plans in proposals were noted by staff as incentives for improved data curation.

Although not a subject of this assessment, tenure-related incentives would in all likelihood also improve data curation practices by domain scientists [15]. Researcher concerns about data sharing are summarised by Martinez-Uribe and Macdonald [22], and the Libraries are not attempting to take a lead role in promoting open access science. However, as is being seen in the nanoHUB site [23], structuring technology to facilitate discovery, usage, and citation can be a powerful factor in rewarding those who want to publish digital materials via pathways not traditionally associated with scholarly communication.

The final goal of the assessment was to identify researchers as partners for pilot data curation projects. Two projects have been initiated with research staff. Both of these collaborations are with service centres which generate analytical datasets for multiple labs in the biology and chemistry departments. The objectives of the pilot projects are a direct response to issues defined by the assessment. One of the most significant challenges will be to incorporate 'curation-friendly' functionality wherever possible in the outcomes of the projects. The library literature generally refers to curation as '... active management and appraisal of digital information over its entire life cycle' [24] and stresses involvement early in the data life cycle [22]. To date, however, relatively few library-sponsored projects and tools seem to be directed toward data management at the point of data generation/collection. The relative lack of exemplars that support curation in this part of the data life cycle may be a significant challenge.

Conclusion

The needs assessment has proven to be a useful tool for investigating and characterising the data curation issues of research scientists. The tested methods of the DAF and other assessment tools were critical to our ability to implement an assessment in a timely and successful manner. The assessment generated actionable information, and the activities also set a foundation for scientists, librarians, and other stakeholders for ongoing conversations and collaboration. The Libraries are looking forward to the next steps in support of data curation, and we hope that the development of data management guidelines, methods, tools, and training will provide templates for services by the Libraries that can be extended to other science research groups on campus.

References

1. Gold A. Data Curation and Libraries: Short-Term Developments, Long-Term Prospects. 2010 http://digitalcommons.calpoly.edu/cgi/viewcontent.cgi?article=1027&context=lib_dean

2. Watkins R, Leigh D, Platt W, Kaufman R. Needs Assessment - A Digest, Review, and Comparison of Needs Assessment Literature. Performance Improvement. 1998 Sep;37(7):40-53.

3. Marcus C, Ball S, Delserone L, Hribar A, Loftus W. Understanding Research Behaviors, Information Resources, and Service Needs of Scientists and Graduate Students: A Study by the University of Minnesota Libraries. University of Minnesota Libraries; 2007 http://lib.umn.edu/about/scieval

4. Choudhury GSC. Case Study in Data Curation at Johns Hopkins University. Library Trends. 2008 (cited 10 April 2009);57(2):211-220 http://0-muse.jhu.edu.janus.uoregon.edu/journals/library_trends/v057/57.2.choudhury.html

5. Henty M. Developing the Capability and Skills to Support eResearch. April 2008, Ariadne, Issue 55 (cited 26 July 2010) http://www.ariadne.ac.uk/issue55/henty/

6. Jones S, Ross S, Ruusalepp R. Data Audit Framework Methodology. University of Glasgow: Humanities Advanced Technology and Information Institute (HATII), University of Glasgow; 2009. http://www.data-audit.eu/DAF_Methodology.pdf

7. Jones S, Ball A, Ekmekcioglu Ç. The Data Audit Framework: A First Step in the Data Management Challenge. International Journal of Digital Curation. 2008 Dec 2 (cited 26 July 2010);3(2):112-120. http://www.ijdc.net/index.php/ijdc/article/view/91/109

8. Ekmekcioglu Ç, Rice R. Edinburgh Data Audit Implementation Project: Final Report. 2009. http://ie-repository.jisc.ac.uk/283/1/edinburghDAFfinalreport_version2.pdf

9. Jerrome N, Breeze J. Imperial College Data Audit Framework Implementation: Final Report. 2009 (cited 26 July 2010) http://ie-repository.jisc.ac.uk/307/

10. Gibbs H. Southampton Data Survey: our experience and lessons learned. JISC; 2009. http://www.disc-uk.org/docs/SouthamptonDAF.pdf

11. Martinez-Uribe L. Using the Data Audit Framework: An Oxford Case Study. EDINA, Edinburgh University; 2009. http://www.disc-uk.org/docs/DAF-Oxford.pdf

12. O'Meara E. Developing a Recordkeeping Framework for Social Scientists Conducting Data-Intensive Research. SAA Campus Case Studies – CASE 5. Chicago, IL: Society of American Archivists; 2008 http://www.archivists.org/publications/epubs/CampusCaseStudies/casestudies/Case5-Omeara-Final.pdf

13. Witt M, Carlson JR. Conducting a Data Interview. December 2007 http://docs.lib.purdue.edu/lib_research/81/

14. Witt M, Carlson J, Brandt DS, Cragin MH. Constructing Data Curation Profiles. International Journal of Digital Curation. 2009 Dec 7 (cited 27 July 2010);4(3):93-103 http://www.ijdc.net/index.php/ijdc/article/viewFile/137/165

15. Heidorn PB. Shedding Light on the Dark Data in the Long Tail of Science. Library Trends. 2008 (cited 2 August 2010);57(2):280-299 http://muse.jhu.edu/journals/lib/summary/v057/57.2.heidorn.html

16. The National Center for Airborne Laser Mapping (NCALM). (cited 2 August 2010) http://www.ncalm.org/home.html

17. IRIS - Incorporated Research Institutions for Seismology. (cited 2 August 2010) http://www.iris.edu/hq/

18. NREL: Solar Research Home Page. (cited 2 August 2010) http://www.nrel.gov/solar/

19. Van den Eynden V, Corti L, Woollard M, Bishop L. Managing and Sharing Data: A Best Practice Guide for Researchers. University of Essex; 2009. http://www.data-archive.ac.uk/media/2894/managingsharing.pdf

20. University of Washington eScience Institute. (cited 3 August 2010) http://escience.washington.edu/

21. Soehner C, Steeves C, Ward J. e-Science and data support services: a survey of ARL members. In: International Association of Scientific and Technological University Libraries, 31st Annual Conference. Purdue, IN: Purdue University Libraries; 2010 (cited 3 August 2010) http://docs.lib.purdue.edu/iatul2010/conf/day3/1

22. Martinez-Uribe L, Macdonald S. User Engagement in Research Data Curation. In: Research and Advanced Technology for Digital Libraries. 2009 (cited 3 August 2010). p. 309-314 http://dx.doi.org/10.1007/978-3-642-04346-8_30

23. Haley B, Klimeck G, Luisier M, Vasileska D, Paul A, Shivarajapura S, et al. Computational nanoelectronics research and education at nanoHUB.org. Journal of Computational Electronics. 1 June 2009 (cited 3 August 2010);8(2):124-131. http://dx.doi.org/10.1007/s10825-009-0273-3

24. Pennock M. Digital curation: a life-cycle approach to managing and preserving usable digital information. Library and Archives Journal. 2007;1. http://www.ukoln.ac.uk/ukoln/staff/m.pennock/publications/docs/lib-arch_curation.pdf

Appendix – Interview Questions

These questions were adapted with permission from the following sources: Data Audit Framework Methodology [6], Conducting a Data Interview [13], and Constructing Data Curation Profiles [14]. The report, Investigating Data Management Practices in Australian Universities [5] also provided some helpful ideas.

I. General background

A. Who "owns" the data (is responsible for the intellectual content of the asset)?

B. IT manager/person responsible for the asset:

C. Department(s) where this data is generated/collected (drop-down list):

D. Institute/centre(s) where this data is generated/collected (drop-down list)

II. Research Context

A. Subject and purpose of the data being collected:

B. Methods of data collection/generation:

III. Data form & format

A. File format(s), including transformation from raw to processed/final use data, if applicable.

B. What form or structure is the data in?:

C. Is there metadata or other descriptive information for the asset?:

D. How large is the dataset? (approximate file size and number of records):

E. What is its rate of growth or update frequency?:

IV. Data storage

A. Current Location:

B. How is the data backed up?

C. Are there related data repositories?

D. What is the expected lifespan of the dataset?

E. Are there grant requirements for data archiving, access, etc?

V. Access and Knowledge Transfer

A. Does the dataset contain any sensitive information (e.g., human subjects)?

B. How could the data be used, reused, and repurposed?

C. If shared with others, how should the data be made accessible (and under what conditions)?

VI. More Details - Top Issues & Questions

In this section of three identical questions, staff chose the top, second, and third priority issues for the above data asset, selecting from the following drop-down list. They were also asked to provide comments to describe each issue more fully.

Choose the top (second, third) data management issue from the following list:

None -

Connecting data acquisition to data storage (network and/or workflows)

Connecting data storage to data analysis (network and/or workflows)

Data storage and backup

Data analysis/manipulation

Finding and accessing related data from others

Ensuring data is secure/trustworthy

Linking this data to publications or other assets

Making this data findable by others

Allowing or controlling access to this data by others

Documenting and tracking updates to the asset

Metadata

No issues need to be addressed

Other (describe below)

Details / comments: