Collaborations Workshop 2012: Software, Sharing and Collaboration in Oxford

Simon Choppin reports on a two-day software workshop held at The Queen’s College, Oxford over 21 - 22 March 2012.

On the 21 and 22 March 2012 I attended a workshop which was unlike the stolid conferences I was used to. In the space of two sunny days I found I had spoken to more people and learnt more about them than I usually managed in an entire week. Presentations were short and focused, discussions were varied and fascinating, and the relaxed, open format was very effective in bringing people from differing disciplines together to consider a common theme. In this case the theme was software, and whether you used or developed it, there was plenty of food for thought.

The Conference with a Difference

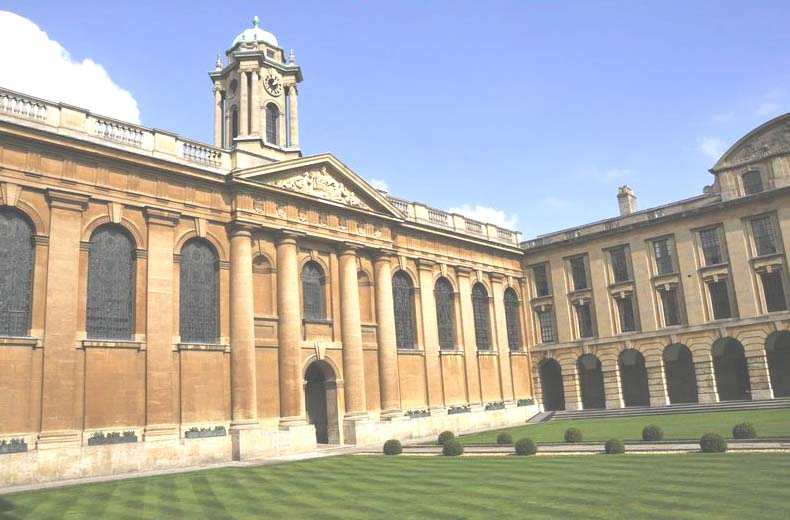

The 2012 Collaborations Workshop [1], run by the Software Sustainability Institute, took place amidst those dreaming spires of Oxford at The Queen’s College, and I spent my time there as an agent in unfamiliar territory. In October of last year I had been selected by the Institute as an 'Agent' [2], to represent them at conferences and report back with the 'state of play' of software in that area. The Collaborations Workshop was the official event of the Institute and there were nine other agents in attendance.

As scientists and engineers at a software developer’s conference, we may have seemed out of place, but were at no disadvantage. The organisers had acknowledged the difference between 'users' and 'makers' of software and asked us to identify ourselves as one or the other when signing up to attend (we were later to receive badges of a distinguishing colour so there was no hiding to which camp we belonged). The workshop was there to provide both groups with the opportunity to discuss the issues which affected us all and to find possible solutions.

The elegant quad of The Queen's College, Oxford

The Collaborations Workshop was very different from the events to which I was accustomed. In my brief career I have attended a number of conferences which stuck to a strict format to keep harassed academics firmly within their comfort zone. At these events delegates are asked to perform a single, well-rehearsed presentation at, and for, a specific time. When you’re not presenting or listening to others present, there are opportunities for ‘networking’ which are embraced by some and avoided by others. Within five minutes of arriving at the Collaborations Workshop I knew things were going to be different.

As I arrived at the front desk I was greeted by neat lines of name badges guarded by a smiling attendant. After ticking off my name and taking the conference pack I took a seat and rifled through the pack for a programme. After a minute of searching my sweaty palms were struggling to leaf through the materials within. No programme!? I was incredulous: how was the workshop supposed to work without a rigid format? The schedule is the framework to plan your time around, a helping hand to allow you to wander vacantly between sessions as if on rails. I asked Simon Hettrick (Conference Chair) where I could find a paper copy so I could clutch it tightly for the next couple of days. He cheerily told me that it had changed so often that there was no point in printing one; in fact he had made yet more amendments less than an hour previously. A pang of fear: I was due to give a talk on the second day; was it possible that had changed too? I had scheduled 47 minutes of extra rehearsal time that very evening. Without it my time in the spotlight would be a pale imitation of what I intended. 'Yes, you’re on this afternoon!', Simon told me. Devastation. If I was going to get any kind of grasp on this crazy workshop I had wandered in on, I needed backup. Most of the other participants seemed to be tapping away at laptops or prodding tablets, all connected, all tuned in. They probably all knew exactly when I was supposed to be presenting. I glanced at my pencil and pad and felt woefully ill-equipped. Thankfully my laptop was in my room. Once connected to the Internet and the resources the Software Sustainability Institute had put online, my heart rate resumed a steady rhythm and I surveyed the days ahead.

The collaborative ideas session, held in the College’s dining room

The format of the workshop was very informal and was deliberately designed to encourage debate, discussion and interaction. Prior to the conference, an e-mail group was set up so that everyone attending the conference could reach everyone else very easily. This method was used to share ideas and the findings of discussions which went on over the two days, and it worked very well.

At the other conferences I had attended, emphasis was on focus, with directed presentations written to discuss a single topic in detail. The equivalents here were 'lightning talks': three minutes of talking, one slide, no questions. They were the presentation equivalent of a tweet. The procedure was: get your message across, engage with an audience and, if you interest someone, discuss things in more detail during a break. It was a very effective way of communicating. After I’d seen all the lightning talks I felt like I knew at least a little about what everyone in attendance did, their background and their interests. Perhaps this says something about me, but because the emphasis was on simplicity, I felt much more able to approach people about areas of which I knew little. I might not be able to discuss the intricacies of their work with them, but at a more general level, we could discuss the elements that we had in common. To demonstrate this, the first session was an exercise in finding common ground. We were asked to talk to other members of our randomly assigned group and come up with a project on which to work together. As we’d never met, there was an initial period of explaining our work, but in a way that let us quickly find commonality. It meant you had to be very receptive to ideas from the other person and also think about your own work very differently. There was no room to focus on the finer points and problems in your research, only the bigger picture. Each of these discussions had to yield a simple idea for collaboration. We managed to jot down a few ideas, which were put on a board for others to read and judge. Each idea received a sticker of approval from anyone who liked it, and the most popular idea was awarded a prize at the end of the two days (to save you the suspense, I regret to report I failed to trouble the judges).

One of the many breakout sessions held over the two days

In addition to the collaboration exercise and lightning talks, four separate sessions were held over the two days for 'breakout' discussions. Around six topics were proposed in each session and, in the spirit of the workshop, each topic was suggested by delegates. You chose a favourite topic for each session, and so attended four breakout sessions in total. The breakout talks themselves were guided discussions from which you had to distil the five most important points. Often this was straightforward: there was a clear theme and the gist of the matter came to light fairly quickly. However, in others it proved difficult to pin the key concepts down. Examples of breakout talks conducted over the two days were:

- How to operate an open source software project effectively

- Building research and communication networks across disciplines

- What 'Grand Challenges' can be addressed by collaboration with software developers?

- How to blog and run a blog

- Successful collaboration with computer scientists

How to Write Code for Everyone - Not Just Yourself

The first breakout session I attended was 'How to write code for everyone and not just yourself'. I was particularly interested in getting some insight from professional software developers into this subject. I had very little experience of proper coding etiquette. In a professional context I was able to stumble along in a couple of programming languages but was starkly aware of the gaping inadequacies that must exist in everything I’d written. If I wanted to put some simple programs online (as I was planning) this could teach me some important lessons. The discussion was lively and led by a few people who had experience in research and industry. What I found interesting about the discussion was the manner in which the subject was tackled, that is, in a very academic manner. Firstly, who is 'everyone'? We decided that 'everyone' is anyone with sufficient experience to be able to use your code. Secondly, why should we be trying to write code for everyone? A very good point and something I’d not considered. The discussion swung around to the motivating factors of typical software developers, how they’re funded, why they write code and how they write it. Badly commented, cryptic code is much quicker to write than finely crafted syntax, and if researchers see programs as tools to create publications, why would they spend any more time than necessary? On the other hand, software programs are the craft of the developer. In academia there are countless useful applications mouldering on hard drives, never to see the light of day, the funding dried up, the project completed. This software has a real cost (and a real value) and without more incentives to release software into the public domain, much more software will be consigned to the virtual scrapheap. So what can be done? I think it was beyond the remit of the breakout session to provide definite solutions, but the group had plenty of good ideas. Journals have a role in encouraging good practice by accepting software-based submissions. Funding bodies need to allow for proper software development, and institutions should recognise the role of the software developer within a university structure. With these measures in place, researchers and academics who write software might be encouraged to create code for everyone, not just themselves.

I acted as 'scribe' through this breakout session, which meant it was my role to record and distil the main points from the discussion as it went on around me. I was quite happy in this role and thought I managed to capture the crux of the argument. However, in the style of this workshop, participation and discussion were encouraged at every opportunity. I soon found out that it was also my role to give a short (four-minute) presentation to those attending. I had to get across the five main points, and the problems and solutions we had discussed. I’m not one for off-the-cuff presentations, favouring instead a robust foundation of endless rehearsal. However, the workshop had a very relaxed atmosphere and I was happy to improvise in front of the crowd. This was quite significant for me. I’m an engineer and am happy to talk about impact dynamics or high-speed video techniques with little warning, but standing in front of a room of professional software developers and telling them how to write code effectively is a different matter. It surprised me that I felt happy to share our group’s findings and answer questions from others.

Credit Where Credit's Due

After all the groups had fed back their findings, it was time for what I can only assume was a typical Oxford collegiate dinner: fine food and waiter service. The discussions continued over food and everyone returned sated in mind and body. Breakout sessions continued after lunch and because I’d been so interested in the first, I was able to attend another which carried on a similar discussion. I’d had my eyes opened to the difficulties faced by software developers within academia. How do you attribute credit to a piece of software? In the traditional academic model, recognition is given through papers. A paper is a discrete piece of research, frozen in time, and credit is attributed definitively to its authors. While this model varies between disciplines and is subject to ‘gaming’, there is a clear path for researchers who want to progress in their careers. Some argued that when you write a piece of software, you can publish a corresponding paper. However, this isn’t always appropriate and there may be no obvious paper to write. A piece of software may be continually under development, with different people adding functionality at different times. Different additions may take different amounts of effort to complete. Given this very different model, who gets the credit? Does that credit change over time?

There were lots of very interesting questions raised about software in an academic context. What was clear was that some form of recognition is necessary so that software developers can legitimately and effectively choose a career in academia. Some journals are responding to this need: the Journal of Machine Learning Research (JMLR) [3] now accepts software-based submissions, stating on its Web site:

'To support the open source movement JMLR is proud to announce a new track. The aim of this special section is to provide, in parallel to theoretical advances in machine learning, a venue for collection and dissemination of open source software. Furthermore, we believe that a resource of peer reviewed software accompanied by short articles would be highly valuable to the machine learning community in general, helping to build a common repository of machine learning software.'

I also learnt via the shared e-mail group that there is a science code manifesto you can sign [4]:

'Software is a cornerstone of science. Without software, twenty-first century science would be impossible. Without better software, science cannot progress.

But the culture and institutions of science have not yet adjusted to this reality. We need to reform them to address this challenge, by adopting these five principles:

- Code: All source code written specifically to process data for a published paper must be available to the reviewers and readers of the paper.

- Copyright: The copyright ownership and licence of any released source code must be clearly stated.

- Citation: Researchers who use or adapt science source code in their research must credit the code’s creators in resulting publications.

- Credit: Software contributions must be included in systems of scientific assessment, credit, and recognition.

- Curation: Source code must remain available, linked to related materials, for the useful lifetime of the publication.'

Change is happening.

My Turn in the Spotlight

Next up was my lightning talk. I’d managed to check through my notes in a coffee break, which provided a framework around which I could babble for three minutes. I adopted the ‘stomp-and-wave’ approach, in the belief that gesticulation and frantic wandering about the stage bolsters the content of any presentation. I managed to get my point across and discuss my work in general terms, all tightly within the three-minute deadline. Spontaneity can be good. I was learning.

That evening there was a drinks reception followed by a dinner in the main hall which was as excellent as the other meal I’d had. There was plenty of opportunity to talk to others and I found that plenty of people were interested in my work, which was encouraging. After a 5 am start, my battery was running on empty, so as the others left to find ‘the most touristy pub in Oxford’, I blearily made my apologies and navigated the ancient corridors to bed.

Conference dinner

Collaboration and Communication

The second day followed a similar format with two more breakout sessions and further opportunities for lightning talks, coffee and stimulating discussion. The first breakout session I attended was a meeting to bring together the Software Sustainability Institute’s 'Agents' (of which I was one) and the National Grid Service’s ‘Campus Champions’ [5]. In both cases the role of these individuals is to get the message and the services of their respective organisations across to the people who might want to use them. Given their similar remits, it was thought that efforts could be co-ordinated and resources pooled to make both groups more effective. The findings of this session were interesting. How do you disseminate useful information most effectively? E-mail? Twitter? A blog? It’s important that everyone is able to access the information but also that its transmission doesn’t become a burden. It was decided that no matter what the method of communication, value had to remain high: there had to be a guarantee that every item of information was of use and relevance. How do you do this? Restrict access. This may seem draconian at a conference touting new software, sharing and open access, but if only one or two people can contact the group, the quality of communication can remain consistent and high.

Ivory Towers 2.0

The last breakout session I attended concerned the use of social media and Web 2.0 to reach the public and other researchers. It was great to hear how the other delegates discussed their research with others and disseminated it to the public. As a Sports Engineer, I’m quite fortunate that my research area has an obvious public interest. I have quite a lot of experience in public engagement and I maintain a blog [6] with others in the research centre. So are social media an effective tool? Blogs and Twitter are a great way to engage with an audience keen to participate. They have to seek out your blog or subscribe to your Twitter feed. For the less engaged, it’s important to find alternative hooks or ways for them to understand and enjoy the work you do. The chair of the session, Phil Fowler, used the fascinating example of a game called 'Foldit' [7]. The game requires the player to perform increasingly complex protein-folding operations and compete for high scores in an online community. Apparently no specific scientific expertise is necessary to play the game, but the very best players can (incredibly) occasionally outperform the best computer systems designed to perform this specific task. As an example it’s quite involved and this level of investment won’t be for everyone, but it goes to show that if you think your work has no public interest or relevance, you may not be thinking hard enough.

The delegates of the Collaborations Workshop 2012

Conclusion

The workshop drew to a close with a final rounding up of the main things we’d distilled from it: incentives for developers, career options in academia and more effective ways for researchers to collaborate online. The workshop had totalled 60 delegates, 23 discussion sessions, 23 lightning talks and 38 possible collaborations. After a long couple of days at the workshop, I left feeling energised. I’d made some great contacts, managed to shed some old habits in making presentations, and learnt a lot about software and sharing ideas. I left the gates of The Queen’s College enthusiastic about the year ahead.

References

1. The Collaborations Workshop http://www.software.ac.uk/cw12

2. Software Sustainability Institute’s Agents Network http://software.ac.uk/agents

3. Journal of Machine Learning Research http://jmlr.csail.mit.edu/

4. Science Code Manifesto http://sciencecodemanifesto.org

5. National Grid Service’s Campus Champions Network http://www.ngs.ac.uk/campus-champions/champions

6. Engineering Sport Blog: The Centre for Sports Engineering Research http://engineeringsport.co.uk

7. Solve Puzzles for Science http://fold.it

Author Details

Simon Choppin

Research Engineer

Sheffield Hallam University

Email: s.choppin@shu.ac.uk

Web site: http://engineeringsport.co.uk/

Simon Choppin is a sports engineering researcher at the Centre for Sports Engineering Research at Sheffield Hallam University, where he specialises in impact dynamics, high-speed video and data modelling. His PhD at Sheffield University investigated tennis racket dynamics and involved working with the International Tennis Federation.

In 2006 he developed a novel 3D method of racket and ball tracking, which was used at a Wimbledon qualifying tournament. His subsequent paper, presented at the Tennis Science and Technology Conference 2007, was awarded the Howard Brody Award for outstanding academic contribution.

Simon is also a keen science communicator and has taken part in several high-profile events promoting the image of science and technology. He was awarded a British Science Association Media Fellowship in 2009 and worked with the Guardian newspaper for four weeks. As well as pursuing his research interests he works on a large project employing neural network and genetic algorithm optimisation techniques. He edits and contributes to the CSER blog, Engineering Sport.