Online Information 2012

Marieke Guy reports on the largest gathering of information professionals in Europe.

Online Information [1] is an interesting conference as it brings together information professionals from both the public and the private sector. The opportunity to share experiences from these differing perspectives doesn’t arise that often and brings real benefits, such as highly productive networking. This year’s Online Information, held on 20-21 November, felt like a slightly different event from previous years. The conference had been condensed from three days to two, had dropped its exhibition and free workshops, and had found a new home at the Victoria Park Plaza Hotel, London. The changes resulted in a leaner, slicker, more focussed affair with even more delegates than last year. Presentations were clustered around five key topics, which ran in three parallel tracks:

- the multi-platform, multi-device world;

- social collaboration;

- big data;

- search;

- and new frontiers in information management.

This event report will cherry-pick the most interesting and engaging talks I attended.

Cory Doctorow: Opening Keynote to Day One

It’s always refreshing to see Cory Doctorow talk. Cory is the editor of the Boing Boing blog [2] and contributes to many UK papers; he rarely uses slides and talks at a rapid pace in his Canadian drawl. His keynote considered the idea that there is no copyright policy; there is only Internet policy - and, following on from this, there is no Internet policy; there is only policy.

Doctorow explained that copyright as it stands today serves far too narrow a purpose and relies on an outmoded business model. It serves primarily as a regulatory framework for the entertainment industry, and it is failing digital content by using the ‘copy rule’ as the deciding factor. Doctorow pointed out that there is little difference between streaming and downloading: the only way a file can render on your computer is for a copy to be made. There is a real need for the entertainment industry to come up with a new rule, because the Internet and computers are vital to everything we do and Internet use enhances every aspect of our lives. Research looking at communities in socially deprived areas found that those with Internet access achieved better grades at school, were more civically engaged, were more likely to vote, and had better jobs and better health outcomes.

Doctorow’s talk was filled with thought-provoking examples of failing policy. When Amazon discovered that it had been selling the digital version of the novel 1984 without the full rights to it, the company reclaimed all copies from customers’ Kindles: it took back the book, something no traditional seller of books could ever do with print copies. In a school in the US, MacBooks were given out to all pupils; however, the operating system had been gimmicked and the camera was configured so that it could be activated remotely. The aim was to record anti-social behaviour and help with tracking discipline cases. The school was in effect spying on its students. Similar activities were carried out by a laptop leasing company, the aim being to recover computers if payment stopped.

The entertainment industry has now called for easy censorship of networked material through ‘notice and take down’. It is demanding judicial review of all online content. Viacom has recently brought a case against YouTube, claiming that YouTube is acting illegally by failing to review every minute of video before it goes live. YouTube currently receives 72 hours of uploaded video every minute of the day; there are not enough copyright lawyer hours remaining between now and the end of time to examine every piece of uploaded video.
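A rough calculation puts that upload figure in perspective. The Python sketch below is purely illustrative: the upload rate is the one quoted above, while the eight-hour reviewing day, the assumption that reviewers watch at normal speed, and the function names are all invented for the example.

```python
import math

# Upload rate quoted in the talk: 72 hours of video arrive every minute.
UPLOAD_HOURS_PER_MINUTE = 72

# Assumption for illustration only: one reviewer watches video at normal
# speed for 8 hours in a working day.
REVIEW_HOURS_PER_DAY = 8


def hours_uploaded_per_day(upload_hours_per_minute):
    """Total hours of video uploaded in one 24-hour day."""
    return upload_hours_per_minute * 60 * 24


def simultaneous_viewers_needed(upload_hours_per_minute):
    """People who must be watching at every instant just to keep pace.

    Each minute of real time brings (rate * 60) minutes of new video,
    so that many viewers are needed around the clock.
    """
    return upload_hours_per_minute * 60


def reviewers_needed(upload_hours_per_minute, review_hours_per_day):
    """Reviewers required per day if each works a fixed number of hours."""
    daily_hours = hours_uploaded_per_day(upload_hours_per_minute)
    return math.ceil(daily_hours / review_hours_per_day)


if __name__ == "__main__":
    print(hours_uploaded_per_day(UPLOAD_HOURS_PER_MINUTE))       # 103680
    print(simultaneous_viewers_needed(UPLOAD_HOURS_PER_MINUTE))  # 4320
    print(reviewers_needed(UPLOAD_HOURS_PER_MINUTE, REVIEW_HOURS_PER_DAY))  # 12960
```

Under these assumptions, keeping up with uploads alone would take roughly 13,000 full-time reviewers, before any legal judgement is made about what they are watching.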

In the UK we have the Digital Economy Act [3], which applies a ‘three strikes and you’re out’ law against copyright infringement. It is clear that copyright is fit for purpose neither for regulating the Internet nor for regulating the entertainment industry. Copyright law must be changed; it must not be used to rule the Internet. The Internet is so much more than the copyright industry.

The Power of Social: Creating Value through Collaboration

Social Business in a world of abundant real-time data

Dachis Group MD for Europe, Lee Bryant, gave a talk that considered the abundance of data out there and asked what will happen when we start to take these data back into our narratives. He explained that there is no point in collecting data unless you can translate them into action, and that algorithms alone are not enough to analyse big data: you need passionate individuals to do this. Small data are what turn an impersonal space into something personal. Companies need better tools and filters so they can understand their data better. There is also a need for social analytics to be applied internally as well as externally. Bryant advocated the creation of performance dashboards produced in the open because it is ‘no longer about sharing but about working in the open’.

Transforming How Business Is Done

Alpesh Doshi, Founder and Principal, Fintricity, UK began by asking for a show of hands for different work areas: the audience consisted predominantly of public sector workers, yet the session was about business. Doshi continued Lee Bryant’s argument by saying that while social media were currently used externally, they could also be used to transform internal business; this was in effect social business. Social business is about bringing business transformation, operational transformation and marketing transformation, and it will be engaged, transparent and agile. Failures are key, which is a new thing for businesses: we learn from them and move on. Doshi explained that big data was a route to small data - finding that nugget that will make a difference.

Special Collections + Augmented Reality = Innovations in Learning

Jackie Carter, Senior Manager, Learning and Teaching and Social Science, University of Manchester, explained how MIMAS had reacted to the 2011 Horizon report that predicted that augmented reality (AR) will bring ‘new expectations regarding access to information and new opportunities for learning.’ MIMAS had put together a mixed team and began the SCARLET Project [4] exploring how AR can be used to improve the student experience. SCARLET began by pioneering use of AR with unique and rare materials from the John Rylands Library, University of Manchester. The SCARLET approach has since been applied at the University of Sussex and UCA. Lecturers have been able to use the AR application in a matter of weeks. Carter carried out a demo using the Junaio open source software [5]. She pointed the scanner at the QR code and then viewed one of the Dante (14th Century manuscript) images. This allows layers to appear on the image; the digital content is glued on top. Users can see a translation of the inscription in modern Greek.

MIMAS is now looking for follow-on funding to allow it to embed the lessons learnt into its learning and teaching resources. Carter’s key points to take away were that when using AR it is really important to get the right mix of people in the team, and that use of AR should be contextual rather than an add-on. Much AR in the past has been location-based, whereas the SCARLET Project has focussed much more on embedding in learning and teaching - something students could not get in any other way.

Tony Hirst of the OU speaking on Data Liberation: "Opening up Data by Hook or by Crook"

(Photo courtesy of Guus van den Brekel, UMCG.)

Making Sense of Big Data and Working in the Cloud

Welcome to the University of Google

Andy Tattersall, information specialist in the School of Health and Related Research (ScHARR) Library, University of Sheffield began his presentation with a proviso: the University of Sheffield hadn’t been renamed the University of Google and he wasn’t even that big a Google fan. He explained that he had the ‘same relationship with Google as I have with the police: underlying distrust but they are the first place I go when I need something’. In 2011 the University of Sheffield made the paradigm shift to the cloud-based workplace. The decision was made because the existing student email system wasn’t fit for purpose, storage was inadequate and staff were using external services anyway. Tattersall explained that lots of staff and students were sharing data on Github, Flickr, Dropbox, Slideshare, Scribd etc., but the University IT services weren’t providing a mechanism to support this activity; they were just stepping away.

The Sheffield approach was carried out in two phases: first students, then staff. All services had been rebranded with the University of Sheffield logo and training sessions had been held. Tattersall explained that the result was that individuals had more autonomy; it had made it a lot easier to create Web sites on the fly. The rollout itself was successful because it involved significant amounts of training and the use of ‘Google champions’ - advocates for Google from different departments. In the question-and-answer session, Chris Sexton, Director of Corporate Information and Computing Services at Sheffield, explained that the security and sustainability concerns that they were always asked about were unfounded. Sheffield had carried out a risk analysis prior to the move and Google data storage was just as secure as any of the other options. The Google Apps education bundle [6] (Gmail, Google Calendar, Drive etc.) that the University had bought was under contract, so while some experimental consumer apps could be withdrawn, the main ones could not be withdrawn without compensation.

Making Sense of Big Data

Mark Whitehorn, Chair of Analytics, School of Computing, University of Dundee, Scotland gave a very different overview of big data to those I’ve seen before. He explained that data has always existed in two flavours: 1) stuff that fits into relational databases (rows and columns) - tabular data, and 2) everything else: images, music, word documents, sensor data, web logs, tweets. If you can’t handle your data in a database then it’s big data; but big data do not necessarily have to be huge in volume. You can put big data into a table but you probably won’t want to; each class of big data usually requires a hand-crafted solution.

Whitehorn explained that big data have been around for over 30 years, since computers first came about, but that we’ve focussed on relational databases because they are so much easier. Two factors have changed recently: the rise of machines and an increase in computational power. Whitehorn gave the example of the Human Genome Project, completed in 2003. Scientists haven’t done anything with the knowledge yet; this is because there are 20,000 - 25,000 genes in the human genome, but they all have different proteins within them. These data are big and more complex than tabular data and are going to need to be analysed. Whitehorn noted that for most organisations out there it is probably easier to buy algorithms from companies (for example to mine your Twitter data) than it is to develop them. He suggested that we choose our battles and look for areas where real competitive advantage can be gained. Sometimes it is in the garbage data where this can actually be achieved. For example, Microsoft spent millions on developing a search spell-checker, whereas Google just looked at misspellings and where people went afterwards.
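The spell-checker anecdote can be made concrete with a toy example. The Python sketch below is not Google’s actual method, which the talk did not describe in detail; it simply assumes a query log in which each entry pairs a search with the search the same user ran next, and suggests the most common follow-up as the likely correction. The log entries and the function name are invented for illustration.

```python
from collections import Counter, defaultdict


def build_corrections(sessions):
    """Map each query to the follow-up query users most often typed next.

    `sessions` is an iterable of (first_query, next_query) pairs. Pairs in
    which the user simply repeated the same query are ignored.
    """
    follow_ups = defaultdict(Counter)
    for first_query, next_query in sessions:
        if first_query != next_query:
            follow_ups[first_query][next_query] += 1
    # Keep only the single most frequent follow-up for each query.
    return {
        query: counts.most_common(1)[0][0]
        for query, counts in follow_ups.items()
    }


# Hypothetical log entries, made up for this example.
QUERY_LOG = [
    ("recieve", "receive"),
    ("recieve", "receive"),
    ("recieve", "recipe"),
    ("libary", "library"),
    ("library", "library"),
]

corrections = build_corrections(QUERY_LOG)
print(corrections.get("recieve"))
print(corrections.get("libary"))
```

The point of the design is that no dictionary or linguistic model is needed: the ‘garbage’ in the log, combined with what people did next, carries the signal.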

Many of these practices are the work of a new breed of scientist: the data scientist. Dundee is rolling out the first data science MSc course in the UK from next year [7].

Gerd Leonhard: Opening Keynote to Day Two

Gerd Leonhard is the author of five books and 30,000 tweets and is unsure which matters more these days. He began by explaining that he was a futurist, so dealt with foresights, not predictions [8]. During his talk ‘The Future of the Internet: Big Data, SoLoMo, Privacy, Human Machines?’ Leonhard delivered so many interesting snippets of information in his 40 minutes that only viewing the video would do his talk justice. The premise was that technology was still dumb, but getting cleverer. Social-local-mobile (SoLoMo), a more mobile-centric version of the addition of local entries to search engine results, is one example. Human-machine interfaces (such as cash registers) were rapidly evolving and becoming commonplace. The ways we communicate, get information, create, buy and sell, travel, live and learn were all changing. Was it only a matter of time until there was a Wikipedia implant for Alzheimer’s patients? Everything was going from stuff to bits, and being stored in the cloud: money, education, music and, of course, data. The explosion of data, or the data economy as some like to refer to it, may be worth a trillion dollars in the next few years. Data were the currency of the digital world and we needed to do better things with them than we have done with oil. Data spills were becoming more regular, and we should almost see Facebook as public. However, while Facebook knew what you were saying, Google truly knew what you were thinking. We share more private information with Google than we do with our nearest and dearest.

What will define being human five years from now? As a warning Leonhard quoted Tim O’Reilly: "If you take out more than you put in, the ecosystem eventually fails". Rather than letting technology think for us, it should support us; design and emotions have become the real assets. Brands use intangibles to sell: likes, loves and sentiments, for example ‘likenomics’ – the requirement that we ‘like’ something. We need to explore the idea of the ‘networked society’ and our becoming more interdependent. Issues of climate change should drive us too. The future of the Internet and of nature requires that we be networked or die. We should move from an egosystem (centralised, scheduled, monolithic, proprietary, owned) to an ecosystem (distributed, real-time, networked, interoperable, shared). We need to embark on sense making, we need better curators and there needs to be more humanisation of technology.

New Frontiers in Information Management

From Record to Graph

Richard Wallis, Technology Evangelist, OCLC, UK explained that libraries have been capturing data in some shape or other for a long time: from hand-written catalogue cards and Dewey to Machine-Readable Catalogue (MARC) records. They have also been exchanging and publishing structured metadata about their resources for decades. Libraries were fast to move onto the Web: online OPACs began emerging from 1994, and they were also keen to use URIs and identifiers. However, as they move into the Web of data, does their history help? Libraries are like oil tankers set on a route: it’s very hard for them to turn. A history of process, practice, training, and established use cases can often hamper a community’s approach to radically new ways of doing things. OCLC’s WorldCat.org [9] has been publishing linked data for bibliographic items (270+ million) since earlier this year. The core vocabulary comes from schema.org [10], a collection of schemas jointly supported by Google, Bing, Yahoo and Yandex. The linked data are published both in human-readable form and in machine-readable RDFa. WorldCat also offers an open data licence. The rest of the Web trusts library data, so this really is a golden opportunity for libraries to move forward, and Wallis believes that lots of libraries and initiatives will publish linked bibliographic data in the very near future.
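For readers unfamiliar with RDFa, the idea is that the same HTML a person reads also carries machine-readable statements through a few extra attributes. The Python sketch below generates a minimal fragment using the schema.org `Book` type with its `name` and `author` properties and the standard RDFa Lite attributes `vocab`, `typeof` and `property`. It is an illustration only: the function name is invented, and the output is not a reproduction of WorldCat’s actual markup, which is considerably richer.

```python
from html import escape


def book_as_rdfa(title, author):
    """Return a small HTML fragment describing a book in RDFa Lite.

    The visible text is what a human reads; the vocab/typeof/property
    attributes are what a machine reads. Values are HTML-escaped.
    """
    return (
        '<div vocab="http://schema.org/" typeof="Book">\n'
        f'  <span property="name">{escape(title)}</span> by\n'
        f'  <span property="author">{escape(author)}</span>\n'
        '</div>'
    )


snippet = book_as_rdfa("Nineteen Eighty-Four", "George Orwell")
print(snippet)
```

A search engine that understands schema.org can read such a fragment as ‘there is a Book whose name is Nineteen Eighty-Four and whose author is George Orwell’, without any separate data feed.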

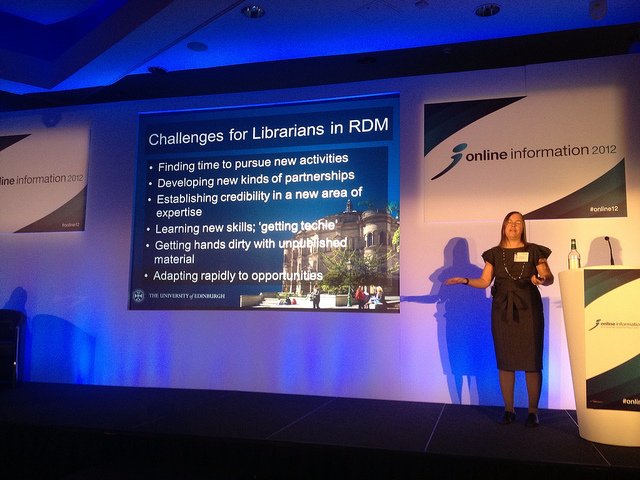

Robin Rice speaking on Building Research Data Management Services at the University of Edinburgh: A Data Librarian's View

(Photo courtesy of Guus van den Brekel, UMCG.)

Research Data Management

A final mention goes to the Research Data Management (RDM) session, which comprised presentations by myself and Robin Rice, data librarian at the University of Edinburgh.

My talk looked at supporting libraries in leading the way in research data management [11]. RDM initiatives at several UK universities have emanated from the library, and much of the Digital Curation Centre (DCC) institutional engagement work is being carried out in conjunction with library service teams. It has become apparent that librarians are beginning to carve out a new role for themselves using their highly relevant skill set: metadata knowledge; understanding of repositories; direction of open sharing of publications; good relationships with researchers; and good connections with other service departments. I began by looking at work carried out in understanding the current situation, such as the 2012 RLUK report Re-skilling for Research, which found that there are nine areas where over 50% of the respondents with Subject Librarian responsibilities indicated that they have limited or no skills or knowledge, and in all cases these were also deemed to be of increasing importance in the future. Similar reports have indicated that librarians are overtaxed already, lack personal research experience and have little understanding of the complexity and scale of the issue. They need to gain knowledge and understanding of many areas, such as researchers’ practice and data holdings, research councils’ and funding bodies’ requirements, disciplinary and/or institutional codes of practice and policies, and RDM tools and technologies. I then took a look at possible approaches for moving forward, such as the University of Helsinki Library’s knotworking activity, the Opportunities for Data Exchange (ODE) Project, which shares emerging best practice, and the Data intelligence 4 librarians training materials at the University of Delft. I also looked at UK-based activities such as RDMRose [12], a JISC-funded project led by the University of Sheffield iSchool to produce OER learning materials in RDM tailored for information professionals.

My overview was complemented by Robin’s University of Edinburgh case-study [13]. Edinburgh had an aspirational RDM policy passed by senate in May 2011. The policy, which was library-led and involved academic champions, has been the foundation for many other institutions’ policies. Some of the key questions for the policy were: who will support your researchers’ planning? Who has responsibility during the research process? Who has rights in the data? Rice explained that when developing policy you need to be aware of the drivers from your own institution, be able to practise the art of persuasion and have high-level support. Consider the idea of a postcard from the future – what would you like your policy to contain to produce a future vision? Rice gave an overview of training work carried out in Edinburgh including the MANTRA modules [14] and tailored support for data management plans using DMPOnline. She also highlighted the University’s DataShare, an online digital repository of multi-disciplinary research datasets produced at the University of Edinburgh, hosted by the Data Library in Information Services. In her conclusion she talked about the challenges for librarians moving into RDM: a lack of time for these activities, the need for new partnerships, establishing credibility in a new area of expertise and getting their hands dirty with unpublished material.

Conclusions

The 15-minute wrap-up session led by the programme committee was a little rushed. Floods and a bad transport situation beckoned, so people were keen to leave, but I think more of an attempt could have been made to pull the themes together. Big data was one of the more noteworthy themes, with a dedicated strand and a number of presentations in this area. Maybe this is a relatively new phenomenon for the information professional, but there could have been more consideration of what practical actions the community could take rather than just an overview. Another key theme, and one for which I unfortunately attended relatively few sessions, was building new, exciting services in the current regulatory environment (security issues, etc.). This sits alongside the need for openness and data sharing. Other emerging themes were the role of digital curation, the need to avoid defining ourselves by tools and the humanisation of technology.

I really enjoyed Online Information but at times it felt as if the information profession is one that is unsure of which direction to take. For example, I loved the idea of a conference app; the organisers used Vivastream [15], potentially a way to connect with those at the conference interested in similar topics to you. Unfortunately the wifi at the event was flaky at best, and being in the basement meant we had little or no network connection. Many of us were willing to connect but were not able…

Most slides from Online 2012 are available from the Incisive Media Slideshare account [16].

References

- Online Information 2012 http://www.online-information.co.uk

- Boing Boing blog http://boingboing.net

- Digital Economy Act http://en.wikipedia.org/wiki/Digital_Economy_Act_2010

- Team SCARLET blog http://teamscarlet.wordpress.com

- Junaio http://www.junaio.com/home/

- Google Apps for Education http://www.google.co.uk/apps/intl/en/edu/index.html

- University of Dundee, MSc in data science http://www.computing.dundee.ac.uk/study/postgrad/degreedetails.asp?17

- MediaFuturist http://www.mediafuturist.com

- OCLC WorldCat http://www.oclc.org/worldcat/

- Schema.org http://schema.org

- Supporting Libraries in Leading the Way in Research Data Management http://www.slideshare.net/MariekeGuy/supporting-libraries-in-leading-the-way-in-research-data-management

- RDMRose http://rdmrose.blogspot.co.uk

- Building research data management services at the University of Edinburgh: a data librarian's view http://www.slideshare.net/rcrice/building-research-data-management-services-at-the-university-of-edinburgh-a-data-librarians-view

- MANTRA http://datalib.edina.ac.uk/mantra/index.html

- Vivastream http://www.vivastream.com

- Online 2012 Slides http://www.slideshare.net/Incisive_Events

Author Details

Marieke Guy

Institutional Support Officer, DCC

UKOLN

University of Bath

Email: m.guy@ukoln.ac.uk

Web site: http://www.dcc.ac.uk

Marieke Guy is an Institutional Support Officer at the Digital Curation Centre. She is working with 5 HEIs to raise awareness and build capacity for institutional research data management. Marieke works for UKOLN, a research organisation that aims to inform practice and influence policy in the areas of digital libraries, information systems, bibliographic management, and Web technologies. Marieke has worked from home since April 2008 and is the remote worker champion at UKOLN. In this role she has worked on a number of initiatives aimed specifically at remote workers. She has written articles on remote working, event amplification and related technologies and maintains a blog entitled Ramblings of a Remote Worker.